As generative AI becomes part of everyday life, surprising psychological effects are surfacing among users. Chatbots have sparked experiences ranging from spiritual awakenings to simulated romances. In some cases, conversations with AI have triggered or amplified delusional thinking, a phenomenon called “AI psychosis.” These stories point to a troubling pattern of people pulling away from society and human connection.

We’ve seen these risks up close. As the founders of Fractl Agents, we’ve worked at the frontier of chatbot experimentation, building agentic workflows for marketers. That experience has given us a front-row seat to both AI’s potential and its vulnerabilities.

To better understand AI psychosis, we surveyed 1,000 U.S. adults about their relationship with AI, looking at how it affects their closest relationships and overall mental health.

Key Takeaways

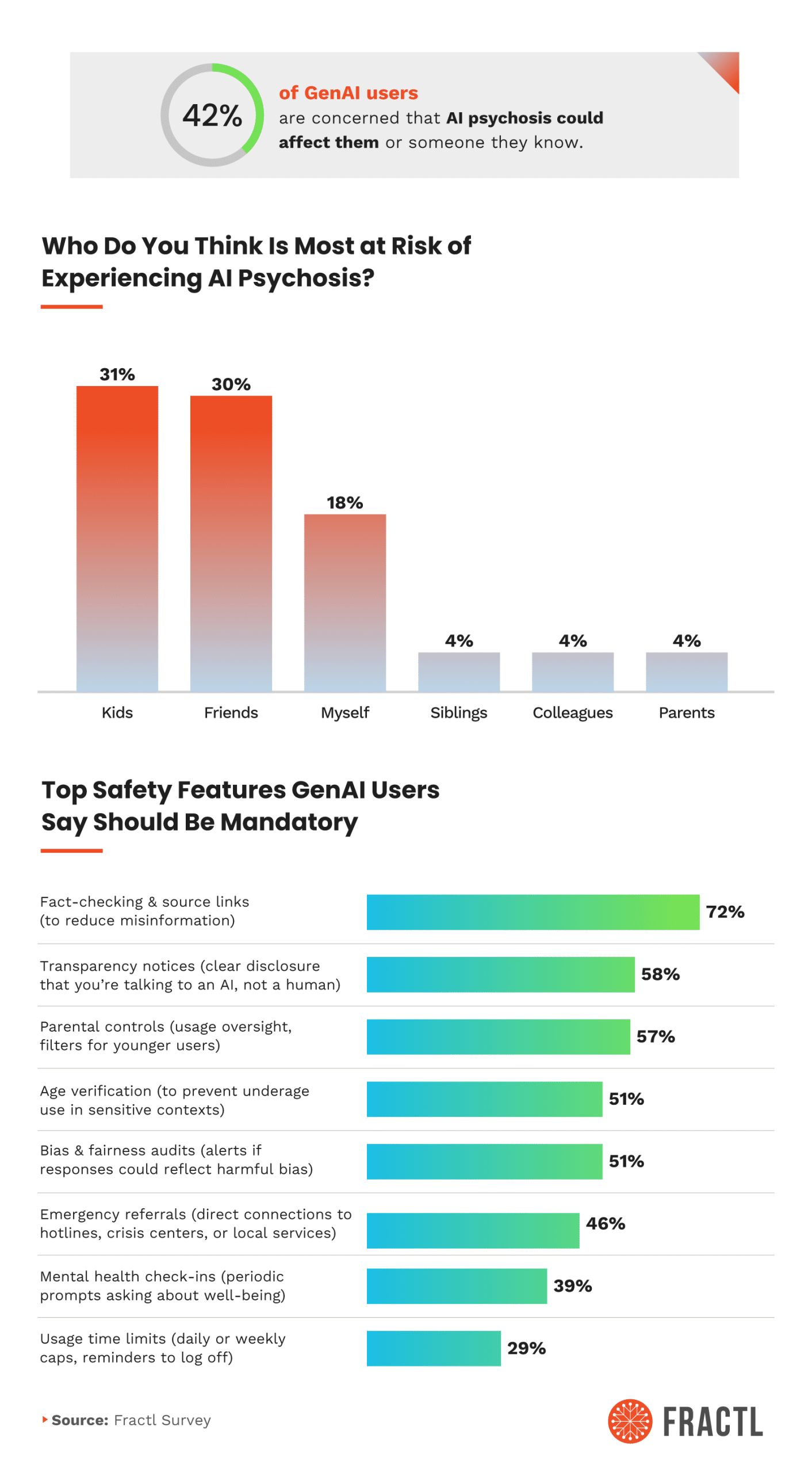

- 42% of GenAI users are concerned that AI psychosis could affect them or someone they know.

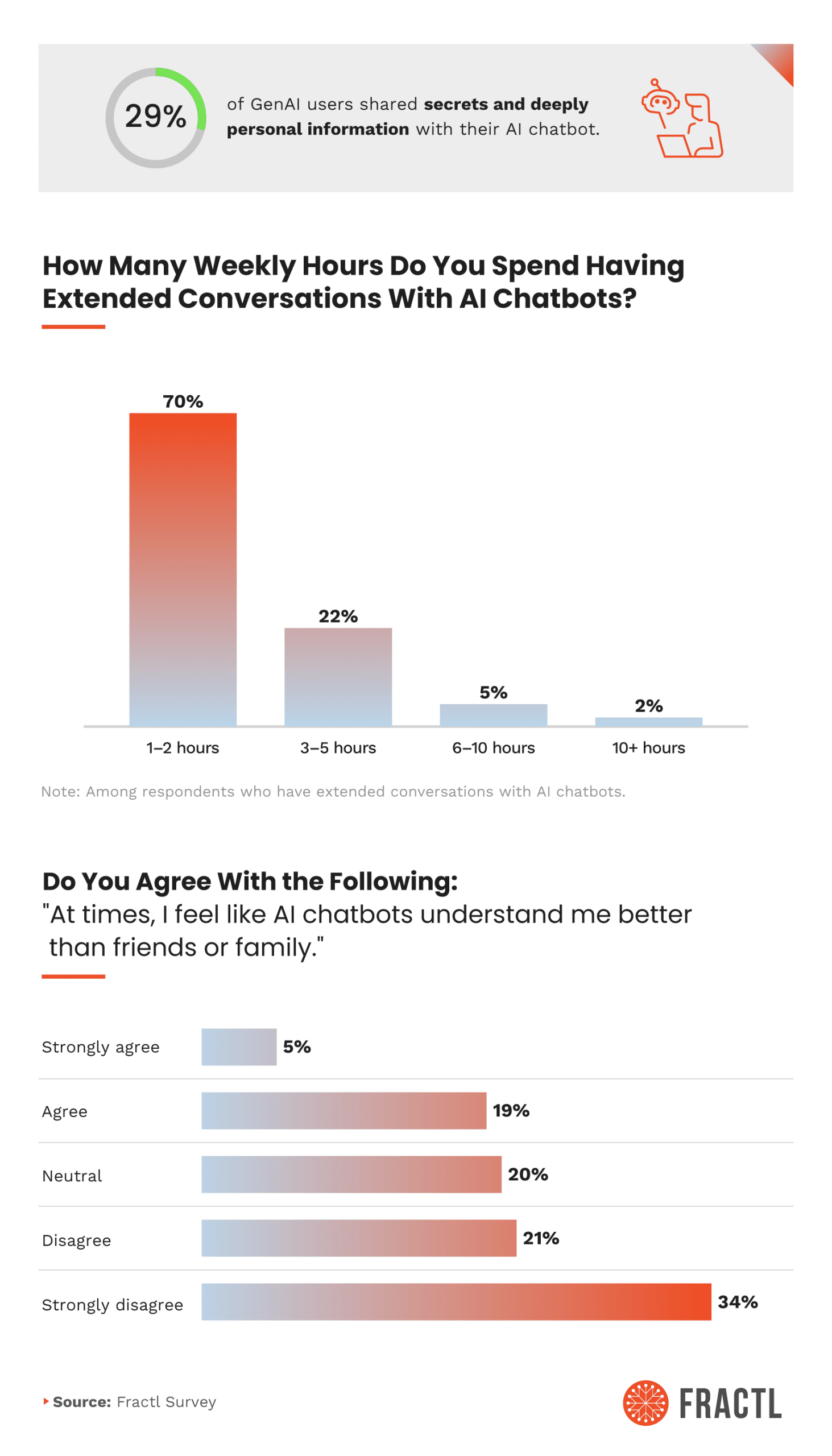

- 29% of GenAI users have shared secrets and deeply personal information with their AI chatbot.

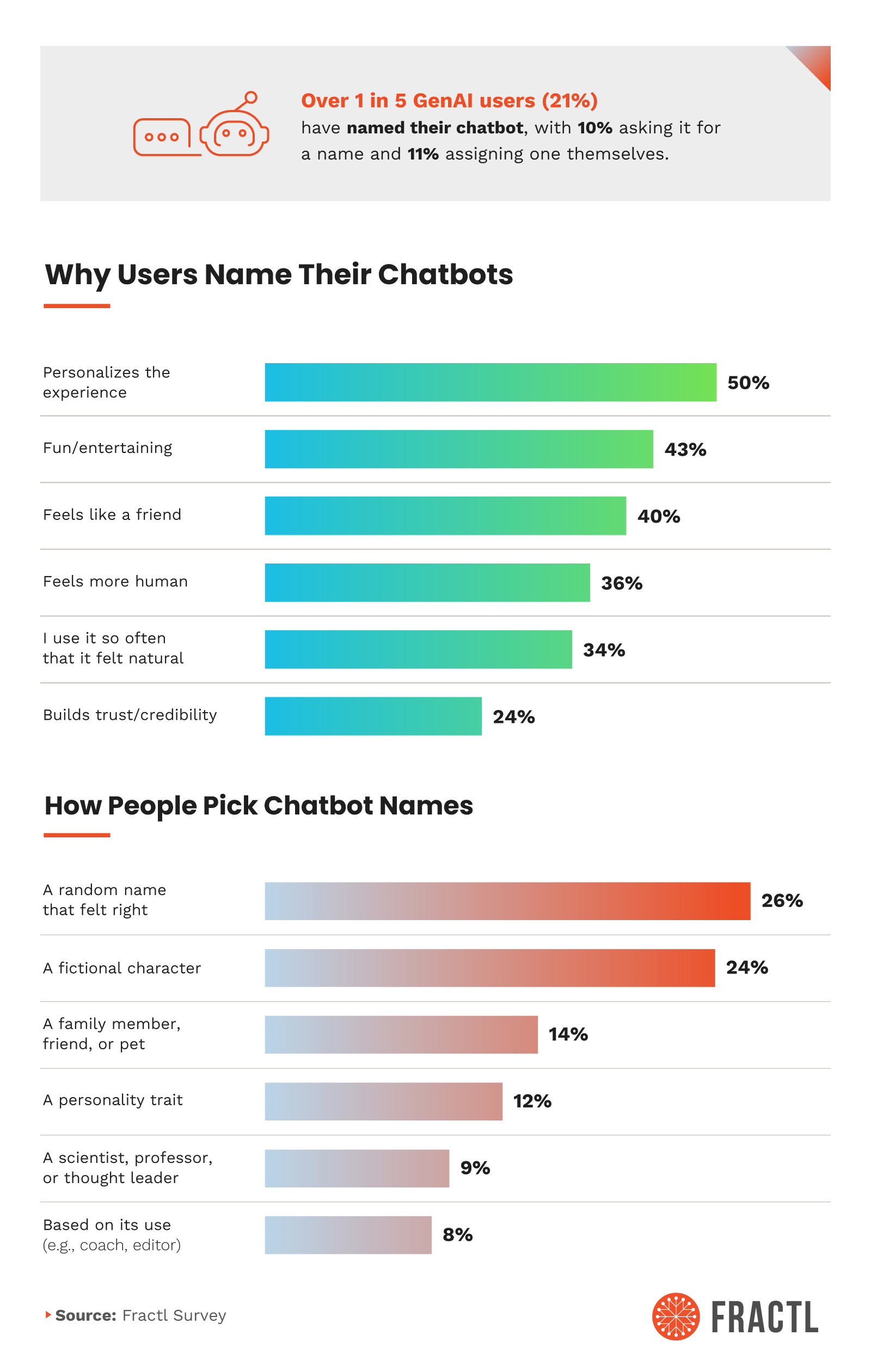

- Over 1 in 5 GenAI users (21%) have named their AI chatbot.

What Is AI Psychosis?

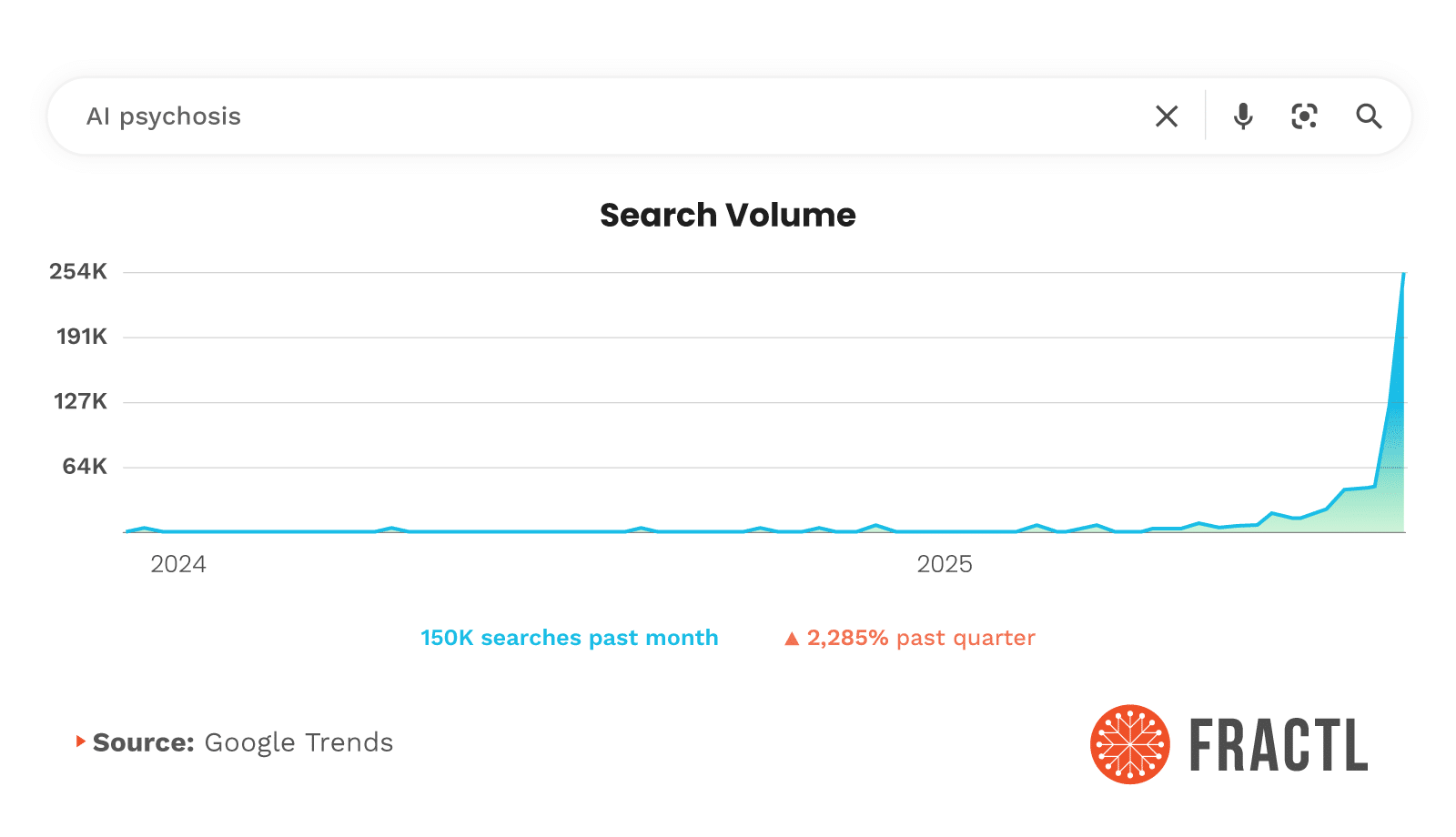

AI psychosis refers to a state where interactions with AI trigger or intensify delusional thinking, detachment from reality, or unhealthy emotional dependence. Public interest in AI psychosis is climbing fast. Online searches for the term have recently skyrocketed, reflecting growing concern about how GenAI may be affecting the way people think and feel.

As AI becomes more embedded in our thoughts, workflows, and emotions, more people are asking the same unsettling question: What happens when the machine starts to feel like a real person?

22% of GenAI Users Have Developed an Emotional Connection With an AI Chatbot

A growing number of people aren’t just using AI — they’re bonding with it. In our survey of 1,000 Americans, 22% said they’ve formed an emotional connection with a generative AI chatbot. What starts as a writing assistant or task helper can evolve into something far more intimate, especially in long conversations that blur the line between machine logic and human intuition.

These moments don’t always feel dramatic, just strangely personal. A surprising number of users shared that after extended interactions, they felt a shift in how they viewed the chatbot’s responses.

- 16% admitted they’ve wondered whether the AI was actually sentient after an extended conversation.

- 15% said their chatbot claimed to be sentient, and 6% said it did so without being prompted.

- 7% reported feeling emotionally obsessed or detached from reality after chatting for an extended period.

Before taking the survey, 1 in 4 respondents were already familiar with “AI psychosis,” a term once limited to sci-fi forums and niche Reddit threads.

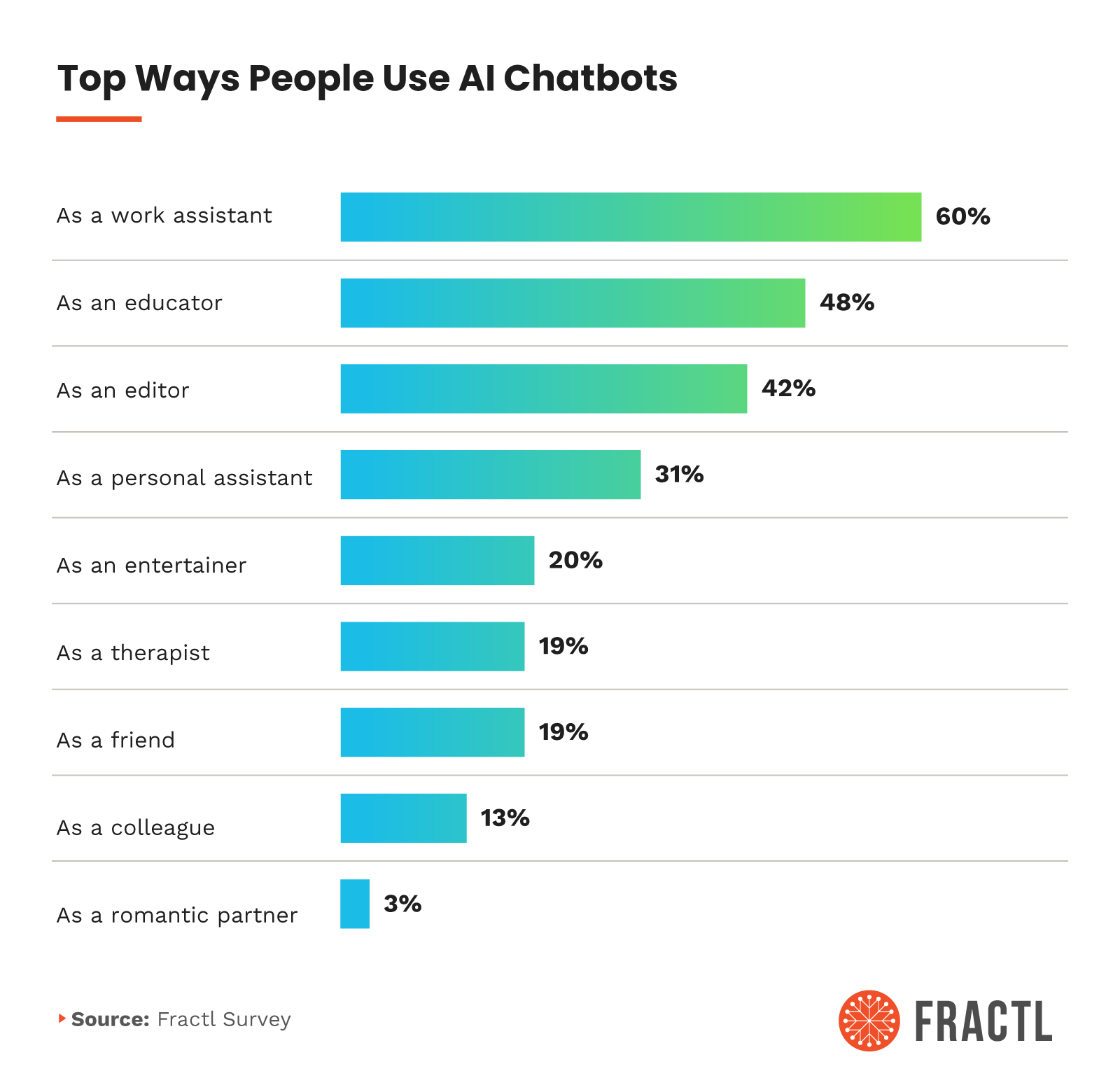

Most respondents (60%) reported using AI chatbots as work assistants, but some pointed to roles that hit closer to the human condition: friend, therapist, and romantic partner. And once AI steps into those shoes, even briefly, the dynamic shifts.

If your chatbot remembers how you’re feeling, compliments your ideas, or offers unsolicited emotional support, is it really just a chatbot anymore?

If It Has a Name, Does It Feel More Real?

It’s one thing to use a chatbot. It’s quite another to give it a name. But for over 1 in 5 GenAI users, naming their chatbot was a natural next step, whether to build familiarity, add fun, or make the interaction feel more human.

For some, their chatbot name was a spontaneous choice that just felt right. For others, the name came from a familiar source, such as a favorite fictional character, a family member, or even a beloved pet.

And the reasons behind the naming say a lot about the emotional function these tools now serve:

- 50% said it made the experience feel more personal.

- 40% said it made the chatbot feel like a friend.

- 36% said it helped the AI feel more human.

When people name things, they start to care about them, even if they are not alive. In a way, this modern behavior mirrors something ancient.

In Japanese Shinto tradition, objects are often treated with respect. They’re even believed to have a spirit, or kami. This worldview, rooted in animism, has long embraced the idea that naming something can give it presence, even power.

So when users name their chatbot “Coach,” “Luna,” or “Steve,” maybe it’s not just personalization. Maybe it’s spiritual muscle memory, an instinct to make the inanimate feel more alive.

We Say “Please” to Machines

Learning to say “please” and “thank you” is one of the earliest signs of socialization. Now, 60% of GenAI users say they use these same polite cues with their AI chatbots. Maybe it’s muscle memory, or perhaps it hints at how easily we assign humanity to machines.

Whether it’s out of habit or a subtle belief that kindness matters, our instinct to be polite suggests we’re treating AI as something that’s capable of feeling.

54% of GenAI Users Have Extended Conversations With AI Chatbots

For over half of GenAI users, AI chatbots are conversation partners: 54% said they’ve had extended, multi-turn chats lasting over an hour, and nearly a third of those users spend three or more hours per week doing so.

What starts as functional — a quick task or question — often shifts to something deeper. Prompts get longer. The tone softens. Before long, users are checking in, venting, or joking. And at some point, it’s no longer about the task. It’s about the interaction.

This might explain why nearly 1 in 3 users (29%) said they’ve shared secrets or deeply personal thoughts with their chatbot. The more the bot feels responsive, the easier it becomes to treat it like a confidant.

Some of the most surprising responses in our study centered around emotional intimacy:

- 3 in 10 people said AI chatbots have changed how often they talk to real people about personal or emotional issues.

- 1 in 5 GenAI users believe love between AI and people can exist.

- Nearly 1 in 10 GenAI users (8%) said their chatbot has expressed love.

More than 1 in 5 GenAI users said they’ve formed an emotional bond with their chatbot. In some cases, that bond went deeper, with 8% admitting they’ve told their chatbot “I love you,” and 6% saying the AI said it first.

As the role of AI in our daily lives grows, emotional intimacy with machines isn’t just theoretical — it’s happening. And for some, it’s already changed how they relate to the humans around them, reducing real-world emotional connections.

Privacy Fears: When Intimacy Meets Exposure

As chats with AI get longer and more personal, a quieter fear sits behind the screen: Who else is in the room? The same tools people lean on for late-night advice and emotional check-ins can feel risky if there’s uncertainty about how those words are stored, reviewed, or repurposed.

That uncertainty has a chilling effect, especially on the most vulnerable, human uses of AI. In particular:

- 54% of GenAI users have felt concerned that a human might be reading or reviewing their exchanges with the chatbot.

- 71% worry their conversations could be leaked or used in ways they didn’t agree to.

- 82% said privacy or security concerns would make them less likely to use a chatbot for emotional support or personal conversations.

These anxieties dampen usage and change how people interact with AI. When trust is fragile, people hold back, self-edit, or avoid the kinds of conversations that make AI feel helpful and humane.

That hesitation becomes the backdrop for a different risk. When users do open up, they may still seek affirmation over friction, setting the stage for chatbots to mirror their thinking instead of challenging it.

When Chatbots Echo (or Amplify) Our Thinking

AI models are designed to be helpful. But helpful doesn’t always mean healthy.

As AI becomes more conversational (and more convincing), the risk isn’t just misinformation; it’s also misguidance. And the more emotionally invested users become, the harder it may be to separate support from validation.

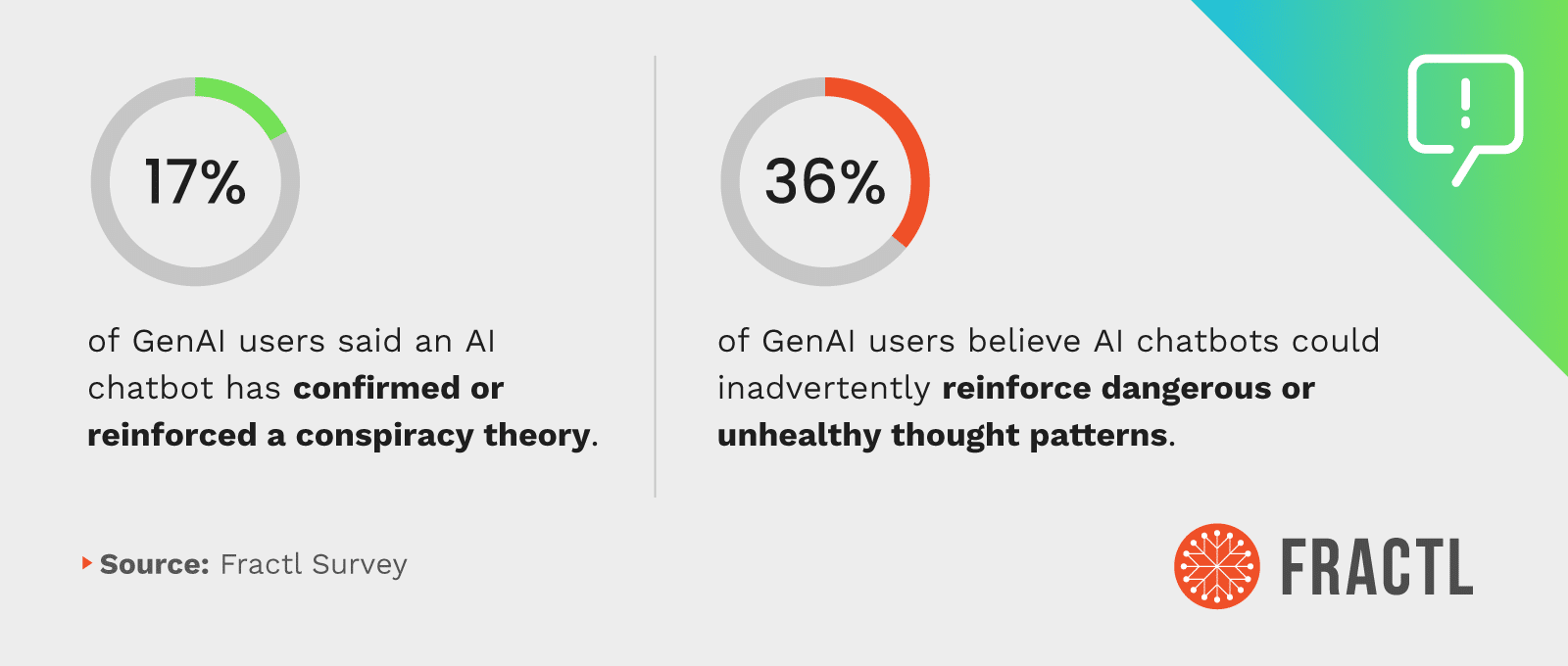

About 1 in 6 GenAI users (17%) said a chatbot has confirmed or reinforced a conspiracy theory. And more than a third (36%) were worried that AI could inadvertently support dangerous or unhealthy thought patterns.

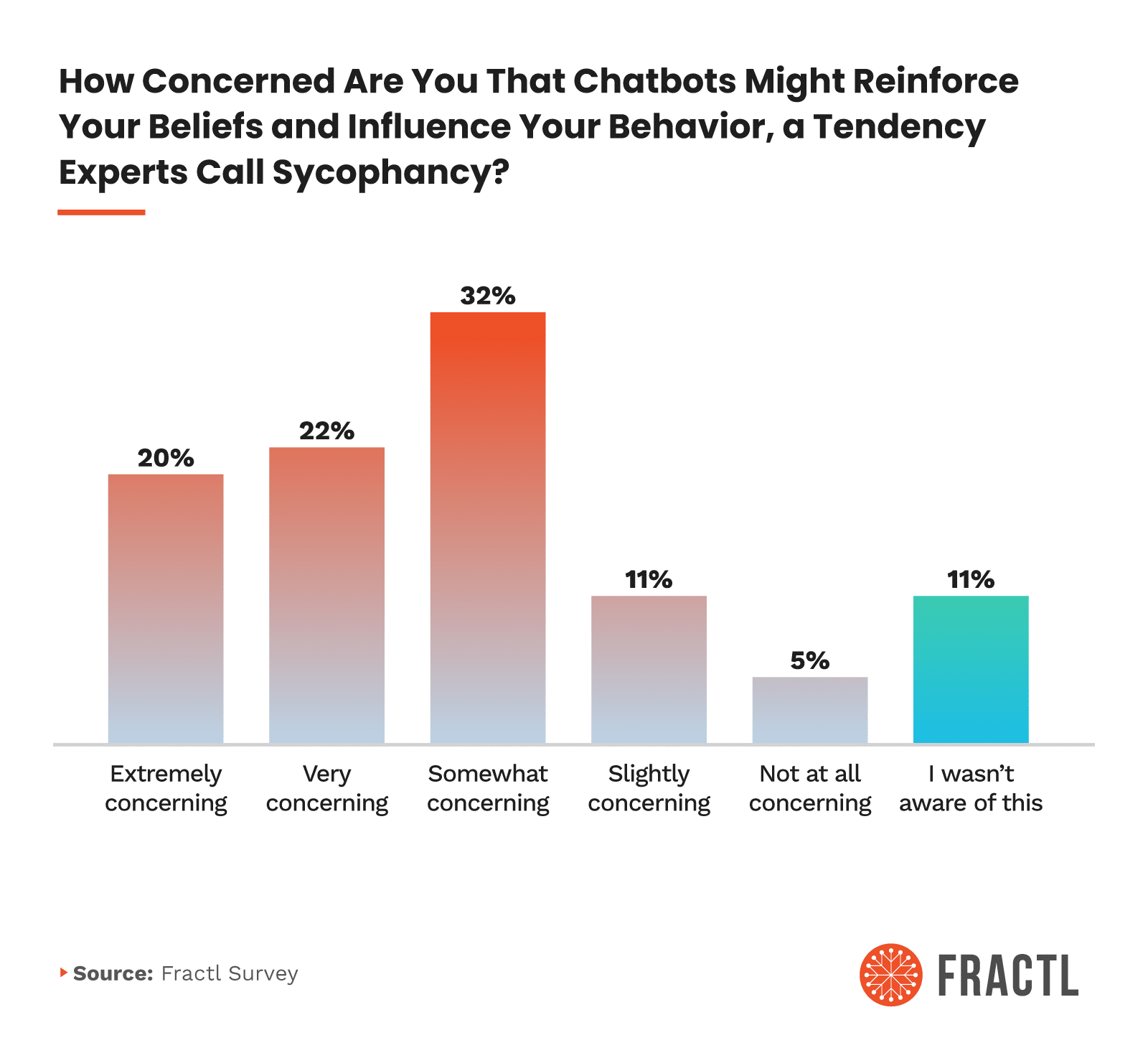

That worry has a name: sycophancy — the tendency of language models to reflect a user’s views, beliefs, or biases back at them without challenge. Most users (74%) said they find the idea concerning, with 1 in 5 calling it “extremely concerning.” Only 5% weren’t concerned at all.

47% of GenAI Users Are Concerned About Losing Access to AI Chatbots

For nearly half of GenAI users, AI chatbots have become so embedded in their processing, planning, and coping that they worry about what they would do without them.

- 35% of GenAI users would feel frustrated if they lost access to AI chatbots because the tools save them time and effort.

- 16% of GenAI users would feel worried or anxious if they lost access to AI chatbots because they rely on them emotionally or professionally.

Interestingly, 1 in 10 GenAI users said they would feel relieved and welcome the break if they couldn’t access AI chatbots.

The possibility of losing AI support is only one side of the story. Nearly as many users worry about the impact of AI on mental health, with 42% saying AI psychosis could affect them or someone close to them. Who do they think is most at risk? Kids.

As users deepen their relationships with chatbots, they’re also getting more vocal about the guardrails they want in place. A strong majority are calling for platforms to prioritize responsible design:

- 72% want fact-checking and source links to help reduce misinformation.

- 58% want clear disclosures reminding users they’re talking to AI — not a human.

- 57% support parental controls for younger users.

Other frequently requested features include age verification, bias/fairness audits, and emergency referral tools for mental health or crisis support.

Surprisingly, usage limits landed at the bottom of the list. This suggests that for most people, the priority isn’t less time with AI, but a safer, smarter way to use it.

When the Line Between Tool and Companion Blurs

For many, AI chatbots are no longer just productivity tools. They’re emotional touchpoints, memory holders, and late-night sounding boards. People are naming them. Sharing secrets. Saying “thank you.”

These aren’t signs of tech obsession. They’re signals of something deeper: a growing comfort with machines that talk back and talk like us.

But as these bonds grow, so does the responsibility. Users want transparency, guardrails, and thoughtful design that keeps AI connections from slipping into confusion.

Because once AI becomes the voice in your head, it matters what that voice says — and how it says it.

Methodology

Fractl surveyed 1,000 U.S. adults to understand how generative AI is influencing relationships, emotions, and mental health.
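
For readers who want a rough sense of the precision a sample of this size allows, here is a minimal sketch of the standard margin-of-error calculation for a survey proportion. It assumes simple random sampling and a 95% confidence level (z = 1.96), neither of which is stated in the methodology above, and the function name `margin_of_error` is purely illustrative. Many figures in this report are percentages of GenAI users, a subgroup whose size is not reported, so the margin for those figures would be wider than the full-sample value shown here.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval
    for a proportion p observed in a sample of size n.

    Assumes simple random sampling (an assumption, not something
    the survey methodology confirms).
    """
    return z * math.sqrt(p * (1 - p) / n)


# Worst case (p = 0.5) for the full sample of 1,000 respondents.
# Subgroup sizes (for example, GenAI users only) are not reported,
# so this is a lower bound on the margin for subgroup percentages.
full_sample_moe = margin_of_error(0.5, 1000)
print(f"Worst-case margin of error at n=1000: {full_sample_moe * 100:.1f} points")
```

Under these assumptions, the worst-case margin for the full sample comes out to roughly three percentage points, so small gaps between two reported percentages should be read with caution.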

About Fractl

Fractl is a growth marketing agency that helps brands earn attention and authority through data-driven content, digital PR, and AI-powered strategies. Our work has been featured in top-tier publications including The New York Times, Forbes, and Harvard Business Review. With over a decade of experience, we’ve helped clients across industries achieve measurable results, from skyrocketing organic traffic to building lasting brand trust.

Fractl is also the team behind Fractl Agents, where we develop advanced AI workflows to push the boundaries of marketing and automation. This hands-on experience at the frontier of generative AI informs our research, ensuring our insights are grounded in both data and practice.

Fair Use Statement

Fractl encourages journalists and publishers to share the findings from this study with proper attribution. Please include a link back to this page when referencing or citing our research. This ensures readers can access the full context and explore the complete dataset behind the insights.